Note: I originally published this post on OCSL’s blog, but am re-creating it here for posterity.

In part 1, we took an initial look at what VMQ is, how it works and why it is good. I also spectacularly failed to explain what was causing the error for the customer, which I now intend to rectify.

VMQ on a single physical NIC is reasonably straightforward: each VM's traffic is assigned to a specific queue, which is in turn assigned to a particular CPU core. When we introduce NIC teaming into the mix, packets for the same VM may be load-balanced across different NICs, and so may arrive on a NIC where they see a different set of queues and cannot reach the queue set up for that VM.

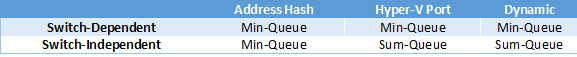

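To try and resolve this issue, the number of available physical queues on the NIC team is reported to the OS in one of two ways, Min-Queue or Sum-Queue, depending on which teaming mode and load-balancing method you use. The table below details how the reporting mode is chosen:

| Teaming mode | Address Hash | Hyper-V Port | Dynamic |
|---|---|---|---|
| Switch-Independent | Min-Queue | Sum-Queue | Sum-Queue |
| Switch-Dependent (Static or LACP) | Min-Queue | Min-Queue | Min-Queue |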

With Min-Queue, the number of physical queues reported is that of the NIC with the fewest queues, i.e. if you have one NIC with 4 physical queues and one NIC with 8 physical queues, Min-Queue mode will report only 4 physical queues on the team. This is because traffic for a particular VM, and therefore a VMQ, may come in on either NIC, and so that VMQ must be present on all NICs in the team in order to deal with it. As a result, each NIC in the team must have access to the same set of CPU cores, which should be configured using the Set-NetAdapterVmq cmdlet.
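As a minimal sketch of the Min-Queue case, the commands below point both team members at the same range of processors. The adapter names (NIC1, NIC2) and the core numbers are placeholders I've made up for illustration, so pick values that suit your own host.

```powershell
# Hypothetical adapter names and core numbers; adjust for your host.
# Min-Queue: every team member uses the SAME set of CPU cores.
Set-NetAdapterVmq -Name 'NIC1', 'NIC2' -BaseProcessorNumber 2 -MaxProcessorNumber 14

# Check the resulting VMQ processor settings on both adapters.
Get-NetAdapterVmq -Name 'NIC1', 'NIC2' |
    Format-List Name, Enabled, BaseProcessorNumber, MaxProcessorNumber, MaxProcessors, NumberOfReceiveQueues
```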

Sum-Queue mode is only used when the teaming mode is Switch-Independent and the load balancing algorithm is either Hyper-V Port or Dynamic. With these magic combinations, the number of physical queues reported is the total available on all NICs (12 for the 4-queue and 8-queue NICs in the example above), as the traffic for the VM (and VMQ) will always arrive on the same member of the NIC team. Unlike Min-Queue mode, with Sum-Queue each NIC in the team must use a different set of CPU cores in order to avoid conflicts.
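If you are not sure which mode a team will end up in, the rule above can be checked against the team's own settings. This is only a sketch: Team1 is a made-up team name, and the script simply applies the rule described in this post to the output of Get-NetLbfoTeam rather than querying the reporting mode directly.

```powershell
# 'Team1' is a hypothetical team name; substitute your own.
$team = Get-NetLbfoTeam -Name 'Team1'

# Cast to string so the comparison works whether the property is an enum or text.
$teamingMode = [string]$team.TeamingMode
$algorithm   = [string]$team.LoadBalancingAlgorithm

# Sum-Queue applies only to Switch-Independent teams using Hyper-V Port or Dynamic.
if ($teamingMode -eq 'SwitchIndependent' -and $algorithm -in 'HyperVPort', 'Dynamic') {
    'Sum-Queue: give each team member its own set of CPU cores'
} else {
    'Min-Queue: give every team member the same set of CPU cores'
}
```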

This leads nicely back to the original error: the NICs were configured using Switch-Independent teaming with the Hyper-V Port load balancing method, which resulted in the team using Sum-Queue mode, but the CPU cores hadn’t been separated out. Luckily, all it took was a couple of commands to configure the NICs to use separate sets of CPU cores (I love PowerShell!), and the problem was resolved. Unfortunately, my coffee had gone cold by this point, but the warm glow of a job well done made up for it.
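For anyone facing the same error, a fix along these lines might look something like the sketch below. These are not the exact commands from the customer's environment; the adapter names and core numbers are placeholders, and the only point being illustrated is that the two ranges must not overlap.

```powershell
# Hypothetical adapter names and core numbers; adjust for your host.
# Sum-Queue: each team member gets its OWN, non-overlapping range of CPU cores.
Set-NetAdapterVmq -Name 'NIC1' -BaseProcessorNumber 2 -MaxProcessorNumber 6
Set-NetAdapterVmq -Name 'NIC2' -BaseProcessorNumber 8 -MaxProcessorNumber 14

# See which processor each queue is actually using.
Get-NetAdapterVmqQueue -Name 'NIC1', 'NIC2'
```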

References: