Note: I originally published this post on OCSL’s blog, but am re-creating it here for posterity.

I was sipping my morning coffee the other day when an email rolled in.

Not unusual in itself, but a customer was having a rather strange error on one of their Hyper-V 2012 hosts:

Source: Microsoft-Windows-Hyper-V-VmSwitch

Description: Available processor sets of the underlying physical NICs belonging to the LBFO team NIC /DEVICE/{E01F00F8-53A0-42B8-B998-511E2BBFE6E7} (Friendly Name: Microsoft Network Adapter Multiplexor Driver #2) on switch 73249AB1-83FF-407B-B80C-A67F56989F6A (Friendly Name: Production Logical Switch) are not configured correctly.

Reason: The processor sets overlap when LBFO is configured with sum-queue mode.

This error is a result of a feature introduced in Windows Server 2008 R2 called Virtual Machine Queue (VMQ). VMQ is an attempt to translate a feature of the physical network into the virtual world.

Typical physical NICs deal with incoming network traffic by sorting it into one of several receive queues, each of which is serviced by a particular CPU core. This balances the computational cost of networking across the available cores in a system, and is known as Receive Side Scaling, or RSS.
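To make the idea a little more concrete, here is a rough sketch of how flows might be spread across queues. It is a toy model, not how any particular NIC does it: real hardware computes its own hash over the packet headers, and here CRC32 simply stands in for that. The queue count, addresses and function name are all made up for illustration.

```python
import zlib
from collections import Counter

NUM_QUEUES = 4  # hypothetical: one receive queue per CPU core


def rss_queue(src_ip, src_port, dst_ip, dst_port, num_queues=NUM_QUEUES):
    """Map a flow to a receive queue.

    CRC32 is only a stand-in for the hash a real NIC computes in hardware.
    """
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % num_queues


# 1,000 made-up flows from different clients to one server address.
flows = [(f"10.0.0.{i % 50 + 1}", 40000 + i, "10.0.1.10", 443) for i in range(1000)]

# Count how many flows each queue (and therefore each core) would handle.
per_queue = Counter(rss_queue(*flow) for flow in flows)
for queue in sorted(per_queue):
    print(f"queue {queue}: {per_queue[queue]} flows")
```

The point to take away is that a given flow always lands on the same queue, while different flows end up on different queues, so no single core has to do all the work.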

However cool this feature is, it stops working as soon as you introduce virtual machines into the mix. The hardware abstraction inherent in all virtual environments prevents the physical NIC from passing network traffic to the CPU of the VM, so RSS is not possible. In fact, without VMQ, all of the processing is performed on a single CPU core, which limits throughput to about 3.5 Gbps.

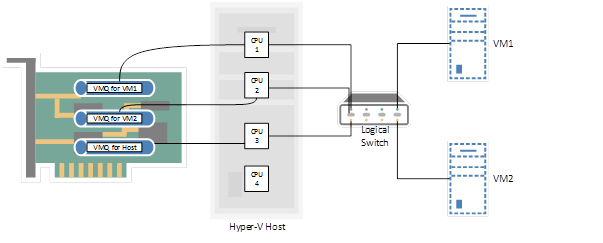

To get around this, each VM, and the host itself, is assigned a VMQ based on its MAC address, and these VMQs are evenly distributed amongst the queues on the physical NIC. This allows the traffic to once again be distributed across multiple cores, allowing greater overall throughput. In Windows Server 2012, the VMQs can even be redistributed across the physical queues depending on network bandwidth and CPU load!
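As a loose illustration of that assignment, the sketch below hands out queues to MAC addresses in round-robin order and then looks up the queue for an incoming frame by its destination MAC. The MAC addresses, the queue count and the function names are invented for the example, and the static round-robin is my simplification: it does not model the dynamic redistribution that Windows Server 2012 can do.

```python
from itertools import cycle


def assign_vmqs(mac_addresses, num_queues):
    """Hand out hardware queues to MAC addresses in round-robin order."""
    queue_ids = cycle(range(num_queues))
    return {mac: next(queue_ids) for mac in mac_addresses}


def queue_for_frame(dest_mac, vmq_table):
    """Pick the queue for an incoming frame from its destination MAC."""
    return vmq_table[dest_mac]


# Hypothetical MAC addresses: the host's virtual NIC plus four guest VMs.
macs = [
    "00-15-5D-00-00-01",  # host
    "00-15-5D-00-00-02",  # VM 1
    "00-15-5D-00-00-03",  # VM 2
    "00-15-5D-00-00-04",  # VM 3
    "00-15-5D-00-00-05",  # VM 4
]

vmq_table = assign_vmqs(macs, num_queues=4)
for mac, queue in vmq_table.items():
    print(f"{mac} -> queue {queue}")
```

With five MAC addresses and four queues, the fifth address wraps around and shares queue 0 with the host. Every frame for a given MAC address always goes to the same queue.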

One downside of using VMQs is that, because of the way VMQs are assigned to the physical NIC queues, the host and every guest VM are each limited to a single physical queue, and therefore to a single CPU core for processing. This limits the throughput of any single VM to the same 3.5 Gbps as above. Nevertheless, this is definitely better than your entire server being limited to 3.5 Gbps, so I’d count that as a win.
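The arithmetic behind that trade-off can be sketched in a few lines. This is back-of-the-envelope only: it takes the roughly 3.5 Gbps per-core figure from above, and assumes each queue is serviced by its own core and that busy VMs land on different queues. The 10 Gbps link and the four queues are hypothetical values chosen for the example.

```python
PER_CORE_GBPS = 3.5  # approximate single-core ceiling quoted in this post


def per_vm_ceiling(link_gbps, per_core_gbps=PER_CORE_GBPS):
    """A single VM is pinned to one queue, and so to one core."""
    return min(link_gbps, per_core_gbps)


def host_ceiling(busy_vms, num_queues, link_gbps, per_core_gbps=PER_CORE_GBPS):
    """Aggregate ceiling, assuming busy VMs sit on different queues and cores."""
    busy_queues = min(busy_vms, num_queues)
    return min(link_gbps, busy_queues * per_core_gbps)


link = 10.0  # hypothetical 10 Gbps NIC
print(per_vm_ceiling(link))      # 3.5
print(host_ceiling(1, 4, link))  # 3.5
print(host_ceiling(2, 4, link))  # 7.0
print(host_ceiling(4, 4, link))  # 10.0, capped by the link itself
```

So one busy VM tops out at about 3.5 Gbps however fast the link is, but a handful of busy VMs between them can fill it.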

It is worth noting that, by default, VMQ is enabled and RSS is disabled as soon as you assign a NIC to a virtual switch. However, this only happens on a per-NIC basis, so it is perfectly possible to have a NIC team for your guest VMs and a separate physical NIC for Live Migration, which keeps RSS and can therefore use the full potential network bandwidth.

“That’s all very well, but how does this relate to the customer’s error?” I hear you cry. Well, the really fun stuff happens when you bring NIC teaming into play. Join me in part 2 for the exciting conclusion…